Hyperautomation – Are AIs coming to steal our jobs?

Ioana Simon - November 25, 2021

Hyperautomation — the word itself sounds futuristic. Images of self-driving flying cars (could we call them self-flying cars?), cyborgs, and autonomous factories come to mind. It seems to tie in with the idea that robots are coming to steal our jobs and feels in some way both scary and promising.

However futuristic it may sound, the word made it to the 2021 Gartner Top Strategic Technology Trends list.

Will humans still make supply chain decisions?

In the article, author Kasey Panetta defines hyperautomation as “the idea that anything that can be automated in an organization should be automated.” So the robots are coming to steal our jobs then? Well, this seems to depend on what we define as “things that can be automated”. For example, is it possible to automate complex decision-making?

In a follow-up report entitled Pursuing an Autonomous Supply Chain With Hyperautomation, the word is defined as “the range and combination of advanced technologies that can facilitate or automate tasks that originally required some form of human judgment or action”. The report goes on to say that by ‘tasks’ they mean “not only tasks and activities in the execution, working or operational environment, but also in thinking, discovering and decision-making.” So it seems that yes, decision-making is one of those tasks we want to hand over to AIs.

The goal of hyperautomation, then, seems to be “supply chain autonomy”. But how far this autonomy should actually go is not clear. Human intervention will still be needed, for example in “redefining supply chain strategy” as well as in “controlling AI data and decision-making”. Does this then mean it will still be humans making the decisions?

AIs see possibilities that we are unaware of

It’s tempting to let advanced technologies make our decisions for us. In many ways they seem much better equipped for decision-making than humans. AIs can incorporate far more data and information into their decision-making processes than humans can, allowing them to see possibilities that we cannot. Using all of this data, they can also predict very effectively what may happen in the future. For example, the Gartner report cites Siemens, which says it achieved an increase in productivity worth over €11 million thanks to advances in predictive demand planning.

AIs are not immune to bias

Humans are also prone to conscious and unconscious biases. The hope is that AIs are independent and objective. In recent years, however, multiple instances have come to light demonstrating that our biases are reflected in the data from which AIs learn, ultimately resulting in biased algorithms. When attempting to automate its hiring process, for example, Amazon developed a machine learning algorithm to identify promising candidates by correlating the text of the CVs in its database with whether those candidates had been hired.

The algorithm was never fully deployed because it turned out to be quite sexist. For example, CVs mentioning the word “women” in the context of a candidate’s sports club, or including the names of women-only colleges, were downgraded when it came to technical positions like software engineering. This was because candidates with such CVs were historically disfavored by their human evaluators.
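To make the mechanism concrete, here is a minimal sketch of how a model trained on past hiring outcomes can absorb the bias in those outcomes. It is not Amazon's actual algorithm. The toy history, the token names, and the helpers `token_scores` and `score_cv` are all made up for illustration, and the scoring rule (hire rate among CVs containing a word, minus the overall hire rate) is just one simple way to "correlate text with success".

```python
from collections import Counter

# Hypothetical toy history: (words found in a CV, whether human reviewers hired).
# The labels deliberately encode a past bias: CVs containing "womens" were
# hired less often than comparable CVs without it.
history = [
    ({"python", "chess"}, True),
    ({"python", "womens", "chess"}, False),
    ({"java", "rowing"}, True),
    ({"java", "womens", "rowing"}, False),
    ({"python", "rowing"}, True),
    ({"java", "chess"}, False),
    ({"python", "womens", "rowing"}, True),
    ({"java", "womens", "chess"}, False),
]


def token_scores(records):
    """Score each word: hire rate among CVs containing it minus overall hire rate."""
    overall = sum(hired for _, hired in records) / len(records)
    seen, hired_with = Counter(), Counter()
    for tokens, hired in records:
        for token in tokens:
            seen[token] += 1
            hired_with[token] += hired  # True counts as 1, False as 0
    return {token: hired_with[token] / seen[token] - overall for token in seen}


def score_cv(tokens, scores):
    """Rate a new CV by summing the learned scores of its known words."""
    return sum(scores.get(token, 0.0) for token in tokens)


scores = token_scores(history)

# Two otherwise identical CVs: the only difference is the word "womens".
without_word = score_cv({"python", "rowing"}, scores)
with_word = score_cv({"python", "rowing", "womens"}, scores)
print(f"score for 'womens': {scores['womens']:+.2f}")
print(f"CV without the word: {without_word:+.2f}, with the word: {with_word:+.2f}")
```

Nothing in the code mentions gender. The penalty comes entirely from the historical labels, which is why cleaning up the algorithm alone does not remove the bias.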

The Gartner report mentions biased data as one of the major risks associated with hyperautomation, concluding that “supply chain employees at all levels must take responsibility — it’s not AI, it’s supply chain people who are liable for the results and impacts, either intended or unintended.” As such, the ultimate responsibility for decisions will rest in human hands, suggesting more of a hybrid approach to automation.

Making AIs transparent

I believe that AIs should be respected as the tools that they are. They’re great at analyzing big chunks of data, and coming up with plans we might not have envisaged. But then it must be up to the human to decide whether to follow these plans.

Because of this close interaction between humans and machines, it becomes essential that they understand one another. This is where XAI, or explainable artificial intelligence, comes in. XAI is the idea that AIs should be transparent and able to explain how they come to their conclusions. This can help the human decision-maker evaluate whether the appropriate factors were taken into account.
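As a rough illustration of what such an explanation could look like, the sketch below uses a deliberately simple linear scoring model that reports how much each input contributed to its recommendation. The factor names, the weights, and the `explain` helper are hypothetical. Real XAI techniques for more complex models are considerably more involved.

```python
# Hypothetical weights of a simple linear model that scores how urgently
# a product should be replenished. Positive weights raise the urgency.
WEIGHTS = {
    "forecast_demand": 0.6,
    "stock_on_hand": -0.5,
    "supplier_delay_days": 0.3,
}


def explain(features):
    """Return the total score and each factor's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


score, ranked = explain(
    {"forecast_demand": 10, "stock_on_hand": 4, "supplier_delay_days": 5}
)
print(f"urgency score: {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

A planner reading this breakdown can see which factor drove the recommendation and judge whether that matches what they know about the situation before deciding to follow it.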

Stealing what jobs?

There’s a lot to be gained from this cooperation if AIs take over the tasks they are better suited for: tasks the human planner might find overly numerical, repetitive, or just plain boring. For one thing, it frees up time and mental effort that could be spent on more meaningful work. According to the Gartner report, “Hyperautomation will eventually be at the core of supply chain execution, while the human will be there to drive supply chain strategy, foster innovation, take care of customer service and experience, and control AI data and decision-making.”

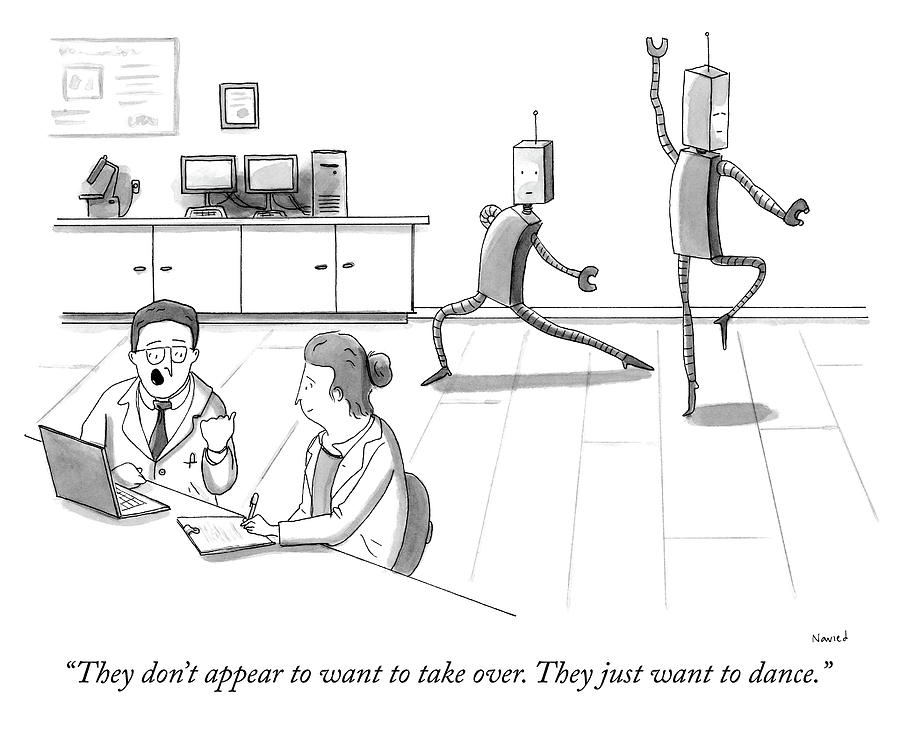

So yes, while AIs might be coming to steal some of our jobs, it’ll only be the ones we’re probably willing to give up anyway.

Want to raise planning agility and make smarter decisions while staying in control of your supply chain? Find out what Unison Planning can do for you.

Cartoon by Navied Mahdavian

Ioana Simon

Supply Chain Consultant

Biography

Ioana is a consultant in the Solver team. There, she primarily works on the advanced S&OP solver and collaborates closely with project teams to ensure customers get the most out of OMP’s vast solver offering. She is passionate about mathematics and dislikes writing her own biography.